Bucket

The bucket tab allows configuring the buckets that are available to the app. Currently, it is only possible to save buckets within S3SPL's app context. Buckets created outside of S3SPL's app context but shared globally are visible and editable. However, if you change the bucket's sharing configuration to App, the bucket will no longer be visible to S3SPL.

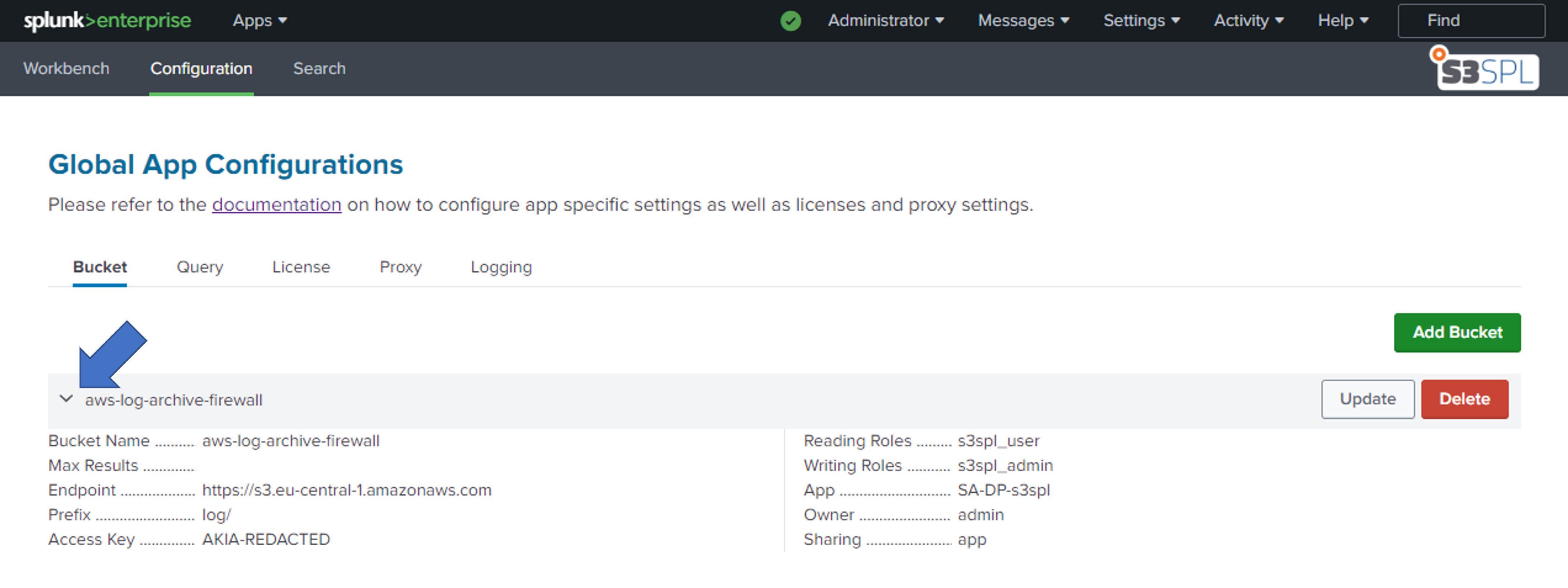

To see all parameters of a bucket, expand it using the arrow on the left of its row. To hide the details, click the same arrow again.

Expand a row to see all available bucket parameters

| Parameter | Description | Required | Example |
|---|---|---|---|
| Name | The name of the bucket configuration. This can be different from the name of the bucket in S3 | ✔️ | aws-log-archive-firewall |
| Bucket | The name of the bucket in S3 | ✔️ | aws-log-archive-firewall |
| Endpoint URL | The URL of the S3 endpoint. This can either be an AWS endpoint or the API of your S3 provider | ✔️ | https://s3.eu-central-1.amazonaws.com |
| Prefix | The prefix of the bucket. This is the path that is used to store the logs in the bucket. The prefix can be empty. See Usage for more information regarding prefix values | ✔️ | logs/ |
| Validate Certificate | Whether the certificate of the endpoint should be validated. If certificate validation is enabled but the certificate is not valid, the connection will fail. See On-Prem custom certificate for how to validate certificates issued by an internal CA | ✔️ | No |
| Access Key | The access key of the user that should be used to access the bucket. The user needs to have read access to the bucket | ✔️ | AKIA... |
| Secret Key | The secret key of the user that should be used to access the bucket | ✔️ | ... |
| Splunk IA Bucket | Whether the bucket contains files written by Splunk Ingest Action's routing to S3 | ✔️ | No |
| Timezone | The timezone of the bucket. This is used to create time-based prefixes and adapt timestamps | ✔️ | Europe/Zurich |
| Max Results | The maximum number of results that should be returned by a query | ✔️ | 1000 |
| Max Results per File | The maximum number of results that should be returned per file by a query | ✔️ | -1 |
| Max Files Read | The maximum number of files that should be read by a query | ✔️ | -1 |
| Reading Roles | The roles that are allowed to read the bucket configuration and subsequently run queries against it | ✔️ | s3spl_admin |
| Sharing | The sharing configuration of the bucket | ✔️ | App |

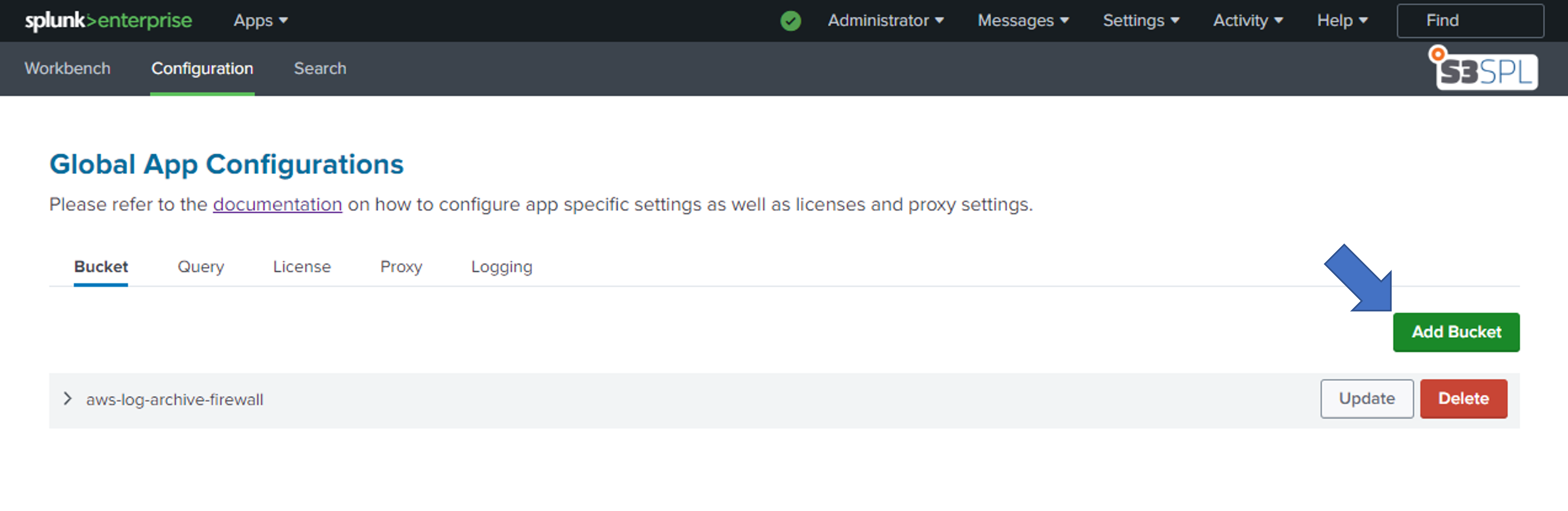
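Before entering the connection parameters in the form, it can help to check them outside of Splunk. The following is a minimal sketch, not part of S3SPL: the helper names `s3_client_kwargs` and `can_list_prefix` are hypothetical, and it assumes the `boto3` package is installed on the machine running the check. It only tests whether the credentials can list objects under the prefix, which is not the same as having read access to every object.

```python
from typing import Any, Dict


def s3_client_kwargs(endpoint_url: str, access_key: str, secret_key: str,
                     validate_certificate: bool) -> Dict[str, Any]:
    """Map the bucket form fields to boto3.client() keyword arguments."""
    kwargs: Dict[str, Any] = {
        "endpoint_url": endpoint_url,
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }
    if not validate_certificate:
        # boto3 validates certificates by default, so only the
        # "Validate Certificate = No" case needs an explicit setting.
        kwargs["verify"] = False
    return kwargs


def can_list_prefix(bucket: str, prefix: str, **client_kwargs: Any) -> bool:
    """Return True if a single list request against bucket/prefix succeeds."""
    # Third-party imports are done lazily so that s3_client_kwargs()
    # stays usable without boto3 installed.
    import boto3
    from botocore.exceptions import BotoCoreError, ClientError

    client = boto3.client("s3", **client_kwargs)
    try:
        client.list_objects_v2(Bucket=bucket, Prefix=prefix, MaxKeys=1)
    except (BotoCoreError, ClientError):
        return False
    return True
```

With the example values from the table, a check could look like `can_list_prefix("aws-log-archive-firewall", "logs/", **s3_client_kwargs("https://s3.eu-central-1.amazonaws.com", access_key, secret_key, validate_certificate=True))`, where `access_key` and `secret_key` hold your own credentials.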

Creating a Bucket

The free license of S3SPL allows configuring a single bucket. The paid license allows configuring a higher number of buckets.

To create a new bucket, open the Bucket tab and click the Add Bucket button in the top right corner. Fill out the form and click Add. Check the Usage section for more information on the prefix options.

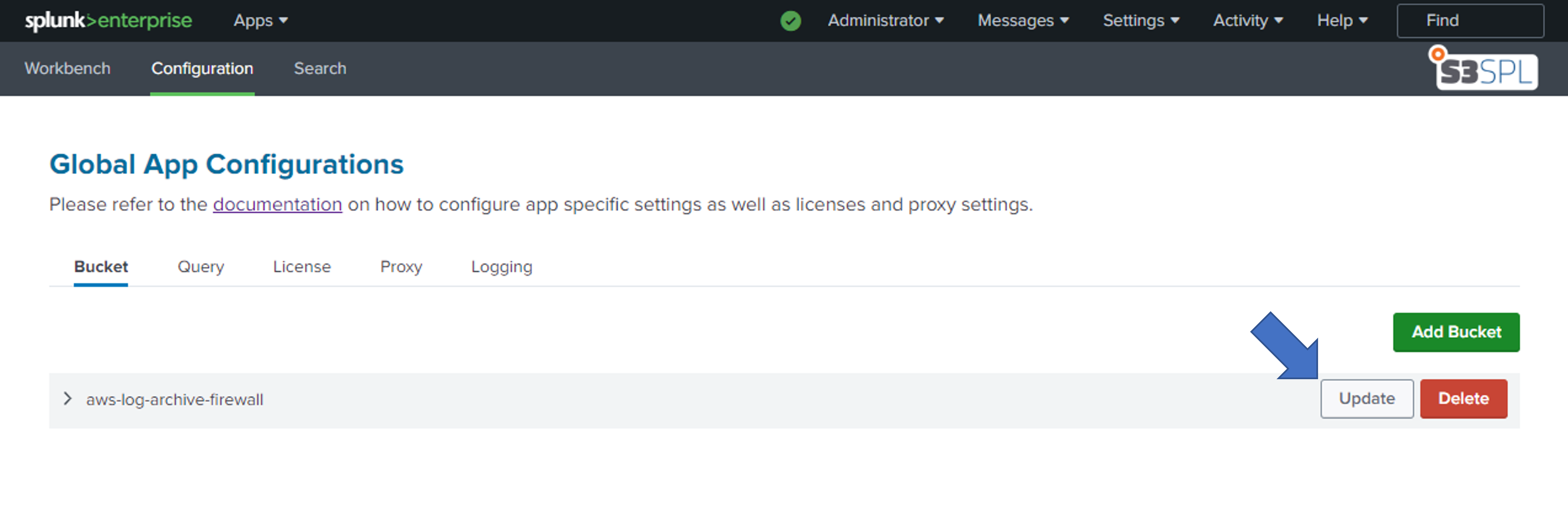

Updating a Bucket

To update a bucket, open the Bucket tab and click on the Update button in the row of the bucket that should be updated. Fill out the form and click on Update. Check the Usage section for more information on the prefix options.

It is not possible to change the name of a bucket. If the name of a bucket should be changed, the bucket has to be deleted and a new bucket with the desired name has to be created.

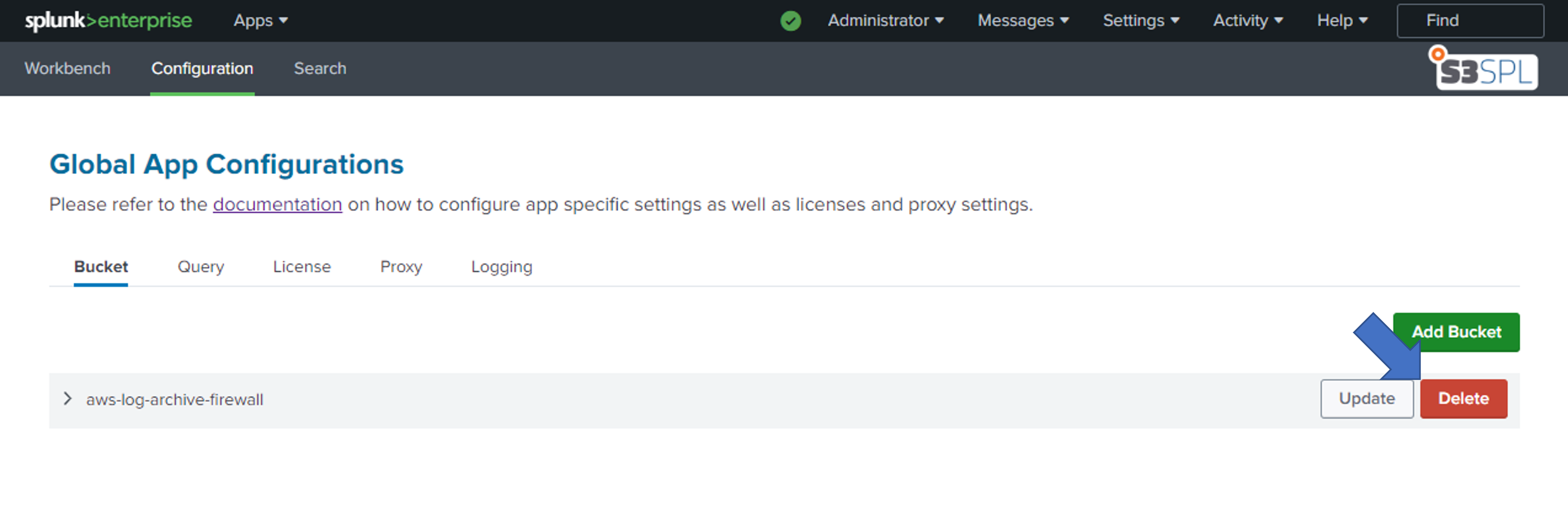

Deleting a Bucket

To delete a bucket, open the Bucket tab and click on the Delete button in the row of the bucket that should be deleted. Confirm the deletion by clicking on Delete.

Deleting a bucket is irreversible.

On-Prem custom certificate

We strongly discourage connecting from Splunk Cloud to On-Prem S3 storage providers. It is not possible to validate non-public certificates from Splunk Cloud.

S3SPL uses the AWS SDK to communicate with S3 resources. To enable S3SPL / AWS SDK to validate your custom certificate, you need to add the certificate to the certificate store of the SDK.

- Get the CA certificate of your internal CA and all intermediate certificates that are required to validate the certificate chain. Save the certificates in PEM format.

- Add the certificates to `lib/botocore/cacert.pem` in the S3SPL app directory. The file should look like this:

      # Certificates bundled with the botocore library.
      -----BEGIN CERTIFICATE-----
      <CA CERTIFICATE>
      -----END CERTIFICATE-----

      # Your own CA certificate
      -----BEGIN CERTIFICATE-----
      <CA CERTIFICATE>
      -----END CERTIFICATE-----

      # Intermediate certificates
      -----BEGIN CERTIFICATE-----
      <INTERMEDIATE CERTIFICATE>
      -----END CERTIFICATE-----

- The AWS SDK should now be able to validate your certificate. You can now enable certificate validation in the bucket configuration.

- In a search head cluster, make sure to deploy the app with the customized `cacert.pem`.
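The steps above can also be scripted. The following is a minimal sketch using only the Python standard library; the function names `append_certificates` and `bundle_is_loadable` are hypothetical and not part of S3SPL or the AWS SDK. It appends PEM files to a bundle such as `lib/botocore/cacert.pem` and then checks that Python's `ssl` module can still parse the result. The parse check only confirms that the file contains readable certificates; it does not prove that the chain validates your endpoint.

```python
import ssl
from pathlib import Path
from typing import Iterable

BEGIN_MARKER = "-----BEGIN CERTIFICATE-----"


def append_certificates(bundle: Path, pem_files: Iterable[Path]) -> int:
    """Append each PEM file to the bundle unless its text is already present.

    Returns the number of files that were appended.
    """
    bundle_text = bundle.read_text(encoding="utf-8")
    appended = 0
    for pem_file in pem_files:
        pem_text = pem_file.read_text(encoding="utf-8").strip()
        if BEGIN_MARKER not in pem_text:
            raise ValueError(f"{pem_file} does not look like a PEM certificate")
        if pem_text in bundle_text:
            continue  # already present, so re-running the script is harmless
        if not bundle_text.endswith("\n"):
            bundle_text += "\n"
        # The comment line records where the certificate came from.
        bundle_text += f"\n# Added from {pem_file.name}\n{pem_text}\n"
        appended += 1
    bundle.write_text(bundle_text, encoding="utf-8")
    return appended


def bundle_is_loadable(bundle: Path) -> bool:
    """Return True if the ssl module can parse the bundle as a CA file."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    try:
        context.load_verify_locations(cafile=str(bundle))
    except ssl.SSLError:
        return False
    return True
```

Keeping a backup copy of the original `cacert.pem` before running such a script is advisable, since an app upgrade or a mistake in the bundle would otherwise be hard to undo.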